Bandwidth vs Latency — 4 Vital Differences

Ever since businesses began embracing the internet, network performance has been critical. But recent trends are putting networks under more pressure than ever before.

More remote working. More cloud-based applications. The digital transformation of all aspects of society. The volume of digital interactions seems to be constantly growing. And all those digital interactions mean more data is on the move than ever - across a myriad of networks. The speed and smoothness of that data flow are critical to the user’s experience.

In this increasingly online world, whether you’re an expanding business, a consumer expecting effortless service, a data engineer setting up an Oozie workflow, or a marketer creating a webpage, network performance matters. And with expectations escalating, it will only matter more.

Bandwidth, latency, and our perception of speed

Consciously or not, we often make judgments about our digital experiences. People often refer to them as ‘fast’ or ‘slow’ (or synonyms of those terms). For example, you may be considering an internet phone system for your small business, but be unsure whether your connection is fast enough for it to run smoothly. But what do ‘fast’ and ‘slow’ mean when used to describe an internet connection?

Two other terms often used here are ‘bandwidth’ and ‘latency’. Unfortunately, the two are easy to confuse, especially if you are not a network engineer.

Bandwidth is not the same as speed. And nor is latency. While both may impact the user’s perception of speed, they tell us different things about the performance of networks.

So what are they? And how are they different?

What is bandwidth?

Bandwidth is how much data can be transferred over a network connection in a fixed time. It’s usually given in terms of ‘Megabits per second’ (Mbps) or ‘Gigabits per second’ (Gbps). The higher the bandwidth, the more data can pass through that particular connection.

Generally, the higher the bandwidth, the better, as more data can pass through the connection at any one time. Hence, the ‘width’ metaphor: more bandwidth means more data just as a ‘wider’ pipe means more water. If all other things are equal, data passes more quickly through a high-bandwidth connection than it would through a low-bandwidth one.

However, bandwidth is not the same as speed. Just because a pipe is wide and can fit a lot of water through in a given time, that doesn’t automatically mean water will travel that quickly through it. Likewise, bandwidth only refers to the theoretical maximum amount of data that could be pushed through a connection in any one time period. Speed, on the other hand, refers to how quickly data is actually sent or received through that connection.

Bandwidth is important. It indicates an important maximum capability of a network. But it doesn’t reveal actual performance. Different factors often mean that a network’s actual data transfer rate (what’s known as ‘throughput’) is lower.

While bandwidth has a bearing on the speed of a network, it is certainly not synonymous with its speed.

What is latency?

When we engage with a network, data is always going somewhere; that data transfer will always involve some time.

Latency is the time it takes for a data packet to travel from one point to another. For instance, it can measure the delay involved in a roundtrip between a user requesting data from a destination server and then receiving it. That roundtrip may be around the world and back again. Or it may just be to the next room and back.

Latency is usually measured in milliseconds (thousandths of a second). Generally, a good latency means below 150 ms (milliseconds). Anything over 200 ms (one-fifth of a second) is perceptible to the human brain, so the delay is felt.

Latency is a hugely important element of user experience. Having made the input, the user wants the output as soon as possible. As the digital transformation of society unfolds, such responsiveness is becoming ever more important. Even short delays can lead to stuttery, stodgy, and unresponsive performance - potentially hindering our use of technology.

A low latency, as close to zero as possible, is ideal. However, it is impossible to achieve zero latency. Ultimately, those packets of data do have to travel: that can’t be done in zero time.

Bandwidth vs. Latency: the vital differences

Bandwidth and latency are both important network metrics. To understand network performance, it’s necessary to appreciate the differences between them.

This is especially crucial for businesses offering any sort of online service or experience. For example, a startup embarking on custom mobile app development should consider how both latency and bandwidth will impact their users’ experiences of the service.

1. They measure different things

Bandwidth and latency reference different aspects of a network.

Bandwidth

As noted, bandwidth measures the maximum amount of data that might be transported through a connection in a given time frame. It is a statement of capability, not a performance measure. As a theoretical description, it ignores the many contextual factors which slow network performance down.

That’s why ‘throughput’ matters. It is a measure of how well a network is using its bandwidth, i.e. how much data is being transferred. To calculate it, the size of the transfer is divided by the time taken. It will always be lower than the theoretical, maximum bandwidth.

A speed test can reveal a network’s use of its bandwidth (i.e. its ‘throughput’). It measures the time taken to download and upload a known quantity of data, which gives the download and upload speeds. Download throughput is generally higher, as more bandwidth tends to be allocated to downloads.
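
The throughput calculation described above (size of the transfer divided by the time taken) can be sketched in a few lines of Python. This is only an illustration: the function name and the example figures are assumptions, not measurements from a real speed test.

```python
def throughput_mbps(bytes_transferred: int, seconds: float) -> float:
    """Average throughput in megabits per second (1 Mb = 1,000,000 bits)."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    bits = bytes_transferred * 8  # file sizes are in bytes, link rates in bits
    return bits / seconds / 1_000_000


# Hypothetical example: 250,000,000 bytes downloaded in 25 seconds.
rate = throughput_mbps(250_000_000, 25.0)
print(f"{rate:.1f} Mbps")  # 80.0 Mbps
```

On a connection sold as 100 Mbps, that hypothetical transfer would be using about 80% of the advertised bandwidth.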

Latency

Latency measures the time taken for a data packet to travel from one internet node to another.

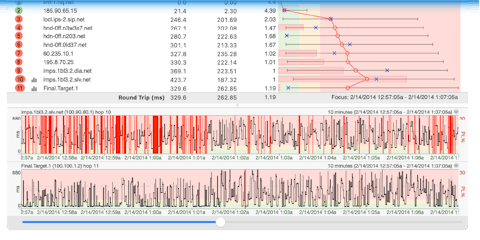

It can be measured using a ping test. This is where an empty request is sent to a server or range of servers. The time taken to receive responses from those servers is measured. That ‘ping’ time gives an idea of the latency of a network (and all the other networks involved in each request reaching its destination of course).

The above ping test was conducted in the United Kingdom. Notice how the ping varied depending on the destination server.
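
A rough way to take similar measurements from a script is to time how long a TCP connection takes to set up. This is a sketch, not a replacement for a real ping test: it times a TCP handshake rather than an ICMP echo, the function names are made up for this example, and `example.com` in the usage comment is a placeholder host.

```python
import socket
import statistics
import time


def tcp_connect_ms(host: str, port: int = 443, timeout: float = 2.0) -> float:
    """Time one TCP connection setup to host:port, in milliseconds.

    If host is a name rather than an IP address, the figure also
    includes the DNS lookup.
    """
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        pass  # connection established; close it straight away
    return (time.perf_counter() - start) * 1000


def summarize(samples_ms: list[float]) -> dict[str, float]:
    """Summarize a list of round-trip samples (in milliseconds)."""
    return {
        "min": min(samples_ms),
        "median": statistics.median(samples_ms),
        "max": max(samples_ms),
    }


# Usage (needs network access; substitute a server you are allowed to probe):
#   samples = [tcp_connect_ms("example.com") for _ in range(5)]
#   print(summarize(samples))
```

Taking several samples and looking at the minimum and median gives a steadier picture than a single reading, since individual round trips vary.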

Ideally, a network is aiming for both a high bandwidth (i.e. the capability for a large quantity of data to be transferred per second) and a low latency (i.e. a shorter delay in sending and receiving data). In combination, these will facilitate better network performance.

2. Different factors affect them

Just as bandwidth and latency measure different aspects, different factors impact them.

Bandwidth

A network’s theoretical bandwidth is limited by various factors. For example, it is determined by internet service providers, who sell users a particular bandwidth. A user’s network bandwidth can therefore be increased by investing in more bandwidth.

But beyond that, the way a network’s bandwidth is utilized will impact performance. Bandwidth in a network is finite. A network may suffer from bandwidth saturation. That means:

- The more processes that users are running on their network, the more bandwidth is shared. This means less bandwidth for each task.

- The more background processes (e.g. system or app updates) occurring, the less bandwidth will be available for other tasks.

- The more devices running off the same internet connection, the more the bandwidth is shared.

- Settings may have been changed to limit bandwidth availability for particular applications or users.

As a result of the above, each user’s share of the network bandwidth is likely to be curtailed in some way, possibly impacting their experience.
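
To make saturation concrete, the sketch below adds up the bandwidth that several simultaneous tasks would like to use and compares the total with the capacity of the connection. The function name and all the figures are hypothetical, and real networks do not share bandwidth this neatly.

```python
def link_utilization(link_mbps: float, demands_mbps: dict[str, float]) -> float:
    """Total demand as a fraction of link capacity (above 1.0 means saturated)."""
    if link_mbps <= 0:
        raise ValueError("link_mbps must be positive")
    return sum(demands_mbps.values()) / link_mbps


# Hypothetical household on a 50 Mbps connection; all figures are illustrative.
demands = {"video stream": 25.0, "video call": 4.0, "system update": 30.0}
utilization = link_utilization(50.0, demands)
print(f"utilization: {utilization:.0%}")         # utilization: 118%
print("saturated" if utilization > 1 else "ok")  # saturated
```

Once demand exceeds capacity, something has to give: each task ends up with less than it asked for.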

Other factors also degrade bandwidth. There may be hardware problems; for example, the router may be experiencing problems. There may be interference from neighboring wireless networks. Or there may be faults in the cables.

Latency

Latency is affected by factors such as the following:

- Distance: The distance between the client’s computer and the server from which information is requested. In the ping test results image above, data takes longer when it has to travel further. The speed of light dictates a minimum limit for latency: data can’t travel faster than light.

- Hops: An additional delay is introduced each time the data hops from one router to another.

- Congestion: If there is a lot of traffic there may be congestion and queues, delaying the data’s journey further. Certain times of day may be busier than others, slowing data transfer.

- Nature of the request: Some tasks and applications inherently involve more latency than others. For example, while ACID transactions in SQL offer substantial benefits, the approach can involve higher latency: there is sometimes a trade-off.

- Other network behaviors: The client and destination machines may need to communicate before the actual data transfer can commence (adding latency). And data transfers sometimes need to start slowly which means high initial latency.

- Hardware: Hardware problems, especially at the client’s end, can slow packets down.
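
The distance factor can be put into numbers. The sketch below estimates the lowest possible round-trip time over a given distance, assuming the signal travels through optical fiber at roughly two-thirds of the speed of light in a vacuum. Both that velocity factor and the example distance are approximations, and real routes are longer than a straight line.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in a vacuum
FIBER_VELOCITY_FACTOR = 2 / 3      # assumption: light in fiber travels at about 2/3 of c


def min_rtt_ms(distance_km: float, velocity_factor: float = FIBER_VELOCITY_FACTOR) -> float:
    """Lower bound on round-trip time over distance_km, in milliseconds."""
    one_way_seconds = distance_km / (SPEED_OF_LIGHT_KM_S * velocity_factor)
    return 2 * one_way_seconds * 1000


# London to New York is roughly 5,570 km in a straight line (approximate figure).
print(f"{min_rtt_ms(5570):.0f} ms")  # about 56 ms
```

Hops, congestion, and the other factors listed above all add to this floor; none of them can reduce it.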

3. Their relative significance varies by task

Both low bandwidth and high latency can damage user experience. In either instance, applications may become slower and less stable, websites may load more slowly, video playback may be delayed, and communication applications may become jittery. But there is a difference: some activities depend more on a high bandwidth while others depend on low latency.

Bandwidth

Some services require more bandwidth than others. These require more data to be transferred per second. So, when throughput is limited, these services are likely to be especially problematic.

The image above demonstrates the varying requirements of different services. At the most extreme we have streaming 4K Ultra HD video, followed by HD video streaming more generally. Video calls are also moderately demanding. As we move to the right, the tasks become less bandwidth intensive.

With insufficient bandwidth, video streaming will suffer from slow buffering. All that data is struggling to squeeze through. Likewise, on video calls, audio and video quality may be low. As the main bandwidth ‘hogs’, such tasks dominate bandwidth, both worsening shortages and suffering most from them.

And then, pushed too far, the impact of insufficient bandwidth can spread. Even less data-heavy tasks may be harder. Cloud-based applications, clunkier. For example, while real-time cloud collaboration on an agency contract may not inherently require much bandwidth, over-stretch may make it challenging. VoIP calls, disjointed. Gaming, disrupted. Even web browsing can become frustratingly slow.

So high bandwidth is most significant when the efficient transfer of a large quantity of data is key. It’s not necessarily as big an issue for less data-intensive tasks.

Latency

With high latency, the user will notice the delay between the input and the result. Remember, delays of 200 ms or more are noticeable to the human brain.

Low latency creates the impression of an instantaneous link between the input and output. This might be in the context of everyday life and work tasks: booking a meeting room using cloud-based facility management software; remote colleagues collaborating in real-time on documents; online gamers wanting a sense that their characters are responding instantly.

Equally, it might be more complex or high-stakes tasks. For example, remote agents chatting with a customer may need immediate, real-time guidance from an AI system to resolve a complaint. Or a developer working with PySpark types. Or maybe a technician remotely controlling complex machinery.

In these examples, bandwidth is often less relevant. For instance, the amount of data for each discrete action may be relatively small, tiny even. But, in each case, low latency will be crucial.
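
A simple first-order model shows why. Assume a transfer costs one round trip plus the time needed to push the data through the link. The sketch below uses that assumption with hypothetical figures; it ignores handshakes, slow start, and protocol overhead, so treat the numbers as illustrative only.

```python
def transfer_time_ms(size_bytes: int, bandwidth_mbps: float, rtt_ms: float) -> float:
    """First-order estimate: one round trip plus time to send the bits."""
    send_ms = size_bytes * 8 / (bandwidth_mbps * 1_000_000) * 1000
    return rtt_ms + send_ms


# A small 2,000-byte request: latency dominates, extra bandwidth barely helps.
print(f"{transfer_time_ms(2_000, 100, 20):.2f} ms")   # 20.16 ms
print(f"{transfer_time_ms(2_000, 100, 200):.2f} ms")  # 200.16 ms

# A 1,000,000,000-byte download: bandwidth dominates, latency barely matters.
print(f"{transfer_time_ms(1_000_000_000, 100, 20):.0f} ms")   # 80020 ms
print(f"{transfer_time_ms(1_000_000_000, 1000, 20):.0f} ms")  # 8020 ms
```

For the small request, a tenfold rise in latency makes it roughly ten times slower. For the large download, a tenfold rise in bandwidth makes it roughly ten times faster.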

Stable, low latency networking matters more than ever before. Our daily lives are increasingly shifting online. Cloud usage is expanding into all areas, from analyzing health stats to CRM software for businesses. Machine learning, automation, and AI are playing an ever-greater role in business and society and all require low latency.

The need for ever quicker response times is getting more pressing. It will be crucial in ensuring digital advances fit seamlessly and successfully into our lives.

4. They can be improved in different ways

There are different ways of improving bandwidth and latency.

Bandwidth

When dealing with bandwidth problems, network managers could consider the following:

- Prioritize network bandwidth for particular activities or users. For business networks, settings can be changed to prioritize vital applications and sideline others.

- Similarly, the allocation of bandwidth to facilitate downloading versus uploading can be adjusted.

- And, ultimately, more bandwidth can be purchased if necessary.
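
The idea behind prioritization can be sketched as a strict-priority allocation: the most important traffic gets what it needs first, and lower-priority traffic shares whatever is left. This is a conceptual illustration only. Real prioritization is configured on routers and other network equipment, and the function, application names, and figures below are all hypothetical.

```python
def allocate(link_mbps: float, requests: list[tuple[str, int, float]]) -> dict[str, float]:
    """Grant bandwidth in priority order.

    requests holds (name, priority, demand_mbps) tuples;
    a lower priority number is served first.
    """
    remaining = link_mbps
    grants: dict[str, float] = {}
    for name, _priority, demand in sorted(requests, key=lambda r: r[1]):
        granted = min(demand, remaining)
        grants[name] = granted
        remaining -= granted
    return grants


# Hypothetical office on a 50 Mbps link; all figures are illustrative.
requests = [("backup", 2, 60.0), ("voip", 0, 1.0), ("video call", 1, 4.0)]
print(allocate(50.0, requests))
# {'voip': 1.0, 'video call': 4.0, 'backup': 45.0}
```

Here the calls get everything they ask for, while the backup is throttled to the remaining 45 Mbps instead of crowding them out.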

Businesses should monitor and evaluate their website’s or application’s bandwidth requirements for clients. For example, some elements of a business’s affiliate marketing landing page may be bandwidth-heavy but yield relatively little value.

Latency

As this involves the data’s entire journey from source to destination (and back again), there’s no easy way to reduce overall latency. However, ensuring a network’s hardware is working properly and is up to date can make a difference.

Choosing applications and systems that specifically feature lower latency also helps. Data engineers, for example, might bear this in mind when comparing Apache Kudu performance against HDFS.

Businesses offering online services should map the journey data takes to and from their servers so that high-latency risks can be identified. Then, the number of hops involved could be reduced, or a Content Delivery Network (CDN) implemented to put key data closer to the client.

Optimize both bandwidth and latency for better performance

For the best performance, both bandwidth and latency need to be understood and optimized. Otherwise, there are likely to be certain tasks or applications where one, or both of them, hinders usability.

Pohan Lin

Senior Web Marketing and Localizations Manager, Databricks

Pohan Lin is the Senior Web Marketing and Localizations Manager at Databricks. Databricks is a global data and AI provider connecting the features of data warehouses and data lakes to create lakehouse architecture. With over 18 years of experience in web marketing, online SaaS business, and ecommerce growth, Pohan is passionate about innovation and is dedicated to communicating the significant impact data has in marketing. Pohan Lin has also published articles for domains such as SME-News.

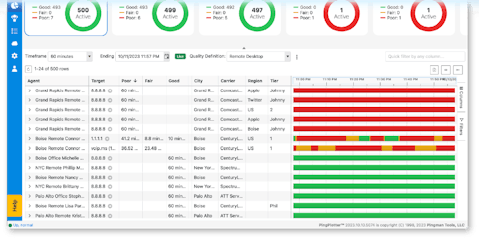
